New York State has approved one of the most direct regulatory interventions yet aimed at how teenagers interact with social media.

The new law requires platforms that use design features linked to compulsive use and mental health stress to display warning labels to minors, marking a shift from voluntary safeguards toward enforceable public health regulation.

Legislative approval came in June 2025, followed by final authorization from the governor’s office in December. Attention now shifts to the regulatory phase, which will determine timing, enforcement mechanics, and operational impact for technology companies.

Why Lawmakers Targeted Platform Design

Policy architects behind the law focused less on content and more on structure. The U.S. Surgeon General's advisory, cited during hearings, pointed to design choices that encourage prolonged engagement, repeated checking, and reward-seeking behavior among adolescents.

Public testimony from pediatric psychiatrists and school administrators described rising rates of anxiety, sleep disruption, and attention difficulties among teens who spend several hours per day on algorithm-driven platforms.

State officials framed the issue as an environmental exposure rather than a matter of individual self-control.

Features Covered By The Law

Platforms fall under the requirement when services used by minors rely on engagement-accelerating mechanisms. Legislative language and sponsor guidance highlight several features viewed as higher risk for adolescent users.

- Autoplay video sequences that remove natural stopping points

- Infinite scrolling feeds that keep loading content without a decision by the user

- Algorithm-based ranking systems optimized for engagement duration

- Visible engagement metrics such as likes and reaction counts

- High-frequency push notifications designed to draw repeat visits

Any platform accessed by a user under 18 while physically located in New York must comply, regardless of corporate location or registration jurisdiction.

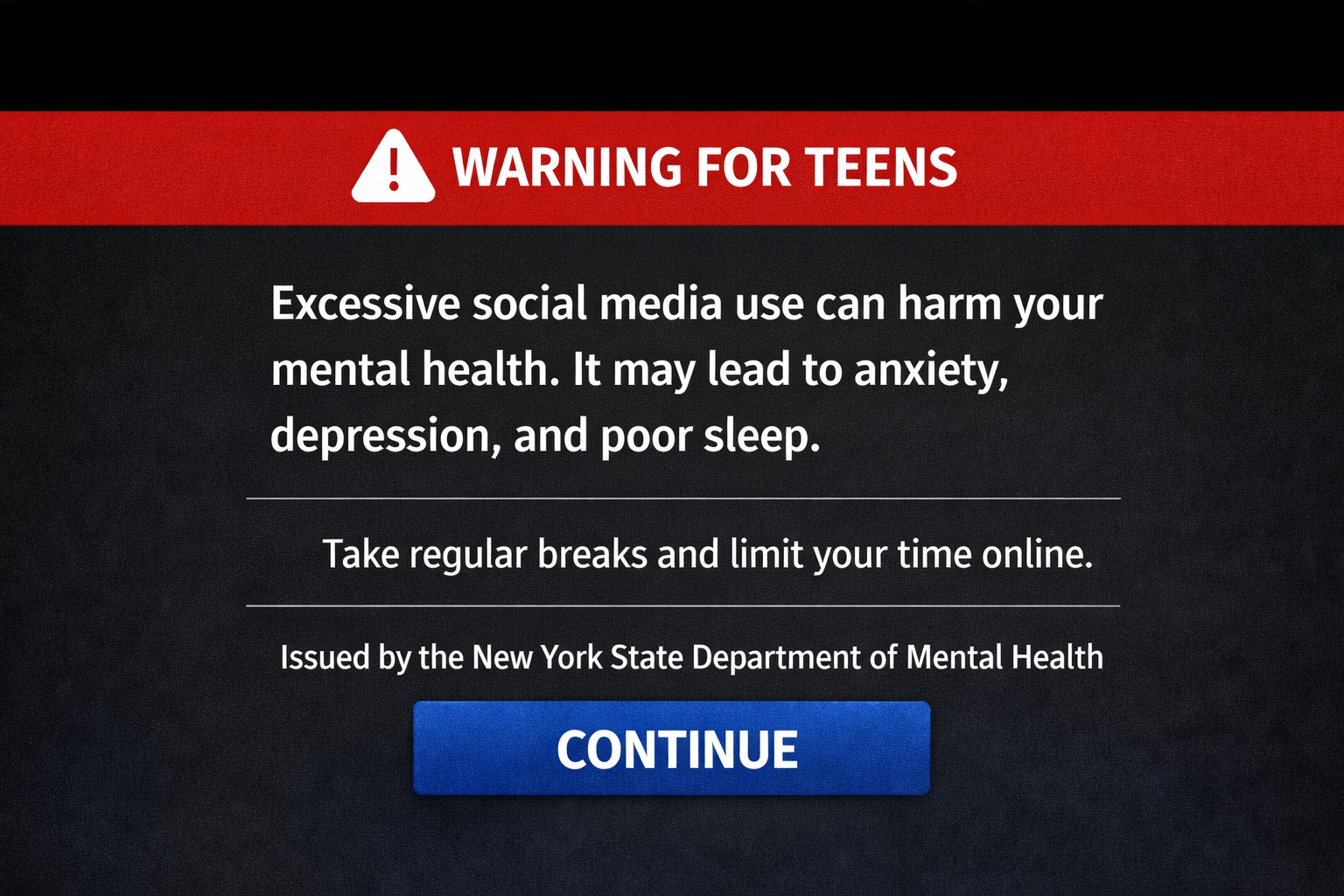

How Warning Labels Must Appear

Warnings must display directly within the user experience and remain unavoidable. Placement inside legal disclosures, account settings, or background documentation fails to meet the standard.

Regulatory guidance discussed during legislative debate outlines a tiered timing model intended to interrupt extended use.

Required Display Timing

- Minimum ten-second warning at initial login

- Minimum thirty-second warning after three cumulative hours of daily use

- Additional warnings once every subsequent hour of continued activity
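The tiered timing above can be sketched in a few lines of Python. This is an illustration only, not language from the law or the pending regulations: the names `WarningEvent` and `warnings_for_day` are hypothetical, the sketch assumes one login per day, and it leaves the display time of the hourly follow-up warnings unset because the figures above do not give one.

```python
from dataclasses import dataclass
from typing import List, Optional

THRESHOLD_MIN = 3 * 60  # three cumulative hours of daily use
REPEAT_MIN = 60         # one further warning per subsequent hour


@dataclass(frozen=True)
class WarningEvent:
    at_minute: int               # cumulative daily minutes when the warning fires
    min_seconds: Optional[int]   # minimum display time; None where no figure is given


def warnings_for_day(total_minutes: int) -> List[WarningEvent]:
    """List the warnings owed on a day with `total_minutes` of cumulative use.

    Assumes the minor logged in at least once that day (hypothetical model).
    """
    events = [WarningEvent(0, 10)]  # ten-second warning at initial login
    if total_minutes >= THRESHOLD_MIN:
        events.append(WarningEvent(THRESHOLD_MIN, 30))  # thirty-second warning at 3 hours
        # One additional warning for each full hour past the three-hour mark.
        for minute in range(THRESHOLD_MIN + REPEAT_MIN, total_minutes + 1, REPEAT_MIN):
            events.append(WarningEvent(minute, None))
    return events


for event in warnings_for_day(5 * 60):
    print(event)
```

Under these assumptions, a five-hour day produces four warnings: one at login, one at the three-hour mark, and one each at hours four and five.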

Final wording will come from the Commissioner of Mental Health in coordination with public health and education agencies. Authority exists for annual updates based on emerging clinical evidence.

Enforcement Authority And Financial Penalties

Oversight responsibility rests with the New York State Attorney General’s office. Civil penalties can reach five thousand dollars per violation, with each failure to display a required warning treated as a separate offense.
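Because each missed warning counts separately, potential exposure scales linearly with the number of failures. A rough upper-bound calculation, assuming every violation drew the full five-thousand-dollar figure (the function name and the sample count are hypothetical):

```python
MAX_PENALTY_PER_VIOLATION = 5_000  # dollars; the ceiling cited above, not a fixed fine


def max_exposure(missed_warnings: int) -> int:
    """Upper bound on civil penalties if every missed warning drew the maximum."""
    if missed_warnings < 0:
        raise ValueError("missed_warnings cannot be negative")
    return missed_warnings * MAX_PENALTY_PER_VIOLATION


# Hypothetical example: 1,000 warnings that failed to display.
print(f"${max_exposure(1_000):,}")  # $5,000,000
```

Actual penalties could be lower, since the figure is a maximum rather than a set amount.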

Regulators designed the structure to favor compliance rather than retroactive punishment. Large platforms with high teenage usage face the greatest exposure, particularly during early enforcement periods.

When Enforcement Begins

The law does not activate immediately. Enforcement begins one hundred eighty days after the final regulations are published. Until that publication occurs, no fixed calendar date exists.

Once rules appear, the countdown becomes automatic. A regulation released early in 2026 would place enforcement in mid-to-late summer of the same year. Observers expect a formal public comment period that may influence technical standards and timelines.
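The countdown is simple date arithmetic. The sketch below uses a hypothetical publication date of January 15, 2026, and assumes the 180 days are plain calendar days counted from the day of publication. The final rules may define the start of the period differently.

```python
from datetime import date, timedelta

ENFORCEMENT_DELAY = timedelta(days=180)  # the 180-day window described above


def enforcement_start(publication: date) -> date:
    """Earliest enforcement date, assuming calendar days from publication."""
    return publication + ENFORCEMENT_DELAY


# Hypothetical publication date; no actual date has been announced.
print(enforcement_start(date(2026, 1, 15)))  # 2026-07-14
```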

Industry Response And Legal Outlook

Technology companies have raised concerns about operational burden and constitutional questions tied to interface design. Trade groups argue that design mandates intersect with speech protections and federal communications law.

Legal challenges remain likely once regulations are finalized. State officials appear prepared for litigation, framing the measure as consumer protection tailored specifically to minors rather than content control.

What Comes Next?

The regulatory process now becomes the central focus. Draft rules will clarify formatting standards, measurement of usage time, and acceptable warning delivery methods.

Platforms will need to prepare technical systems capable of tracking cumulative daily use for underage accounts within New York.
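One way such tracking might look, as a minimal sketch rather than anything the law prescribes: the class below (a hypothetical `DailyUsageTracker`) sums session time per account per calendar day and splits sessions that run past midnight. It assumes timestamps are naive datetimes already expressed in whatever local time the final rules specify, and it leaves age and location checks out entirely.

```python
from collections import defaultdict
from datetime import date, datetime, time, timedelta


class DailyUsageTracker:
    """Accumulates usage seconds per (account, calendar day). Illustrative only."""

    def __init__(self) -> None:
        self._seconds = defaultdict(float)  # key: (account_id, date)

    def add_session(self, account_id: str, start: datetime, end: datetime) -> None:
        if end < start:
            raise ValueError("session ends before it starts")
        cursor = start
        while cursor < end:
            # Cut the session at midnight so each day gets only its own share.
            next_midnight = datetime.combine(cursor.date() + timedelta(days=1), time.min)
            chunk_end = min(end, next_midnight)
            self._seconds[(account_id, cursor.date())] += (chunk_end - cursor).total_seconds()
            cursor = chunk_end

    def hours_on(self, account_id: str, day: date) -> float:
        return self._seconds.get((account_id, day), 0.0) / 3600


tracker = DailyUsageTracker()
tracker.add_session("teen-1", datetime(2026, 7, 1, 22, 0), datetime(2026, 7, 2, 1, 30))
print(tracker.hours_on("teen-1", date(2026, 7, 1)))  # 2.0
print(tracker.hours_on("teen-1", date(2026, 7, 2)))  # 1.5
```

How "daily" use is measured, including which clock resets the count, is among the questions the draft rules are expected to settle.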